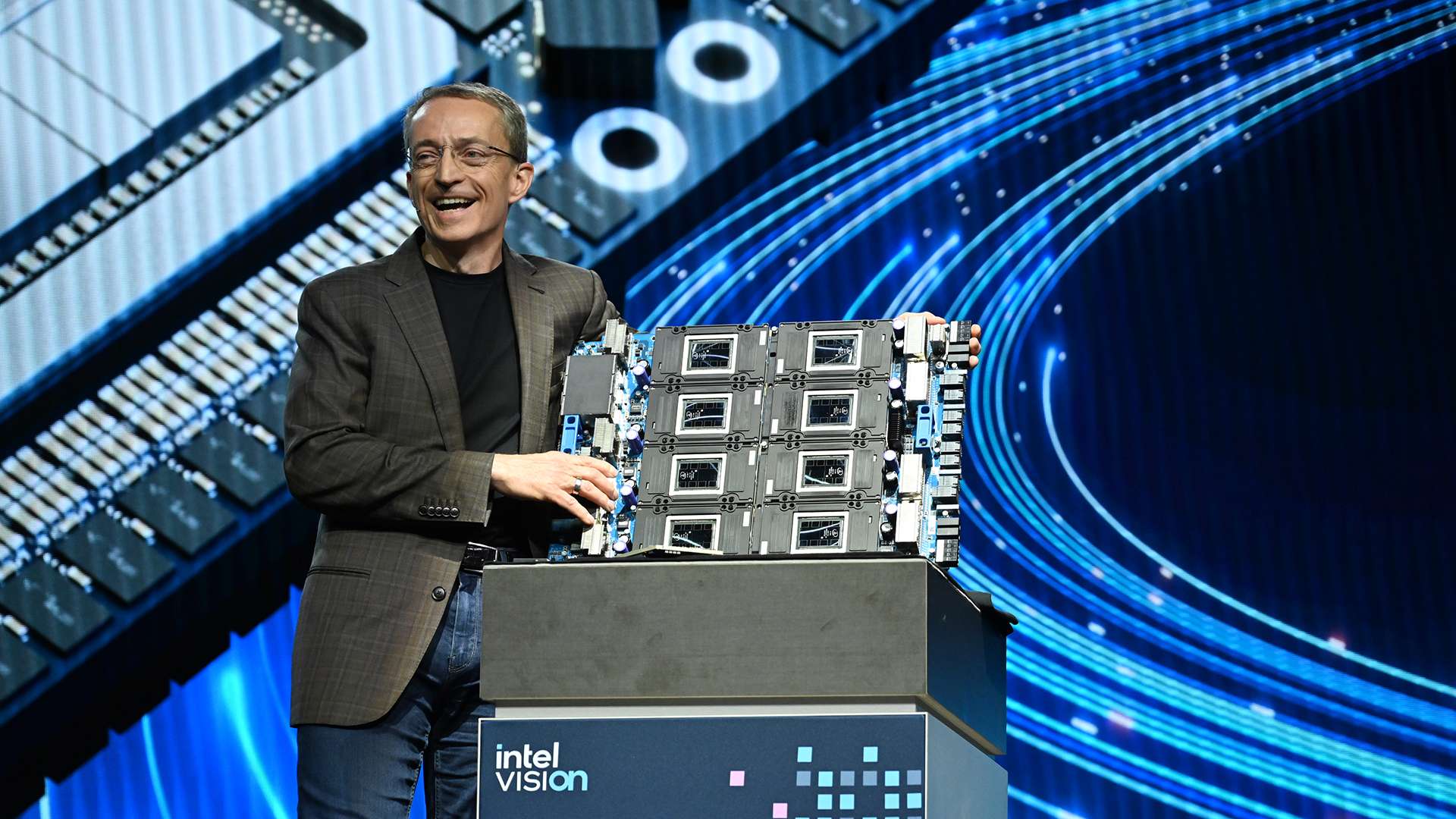

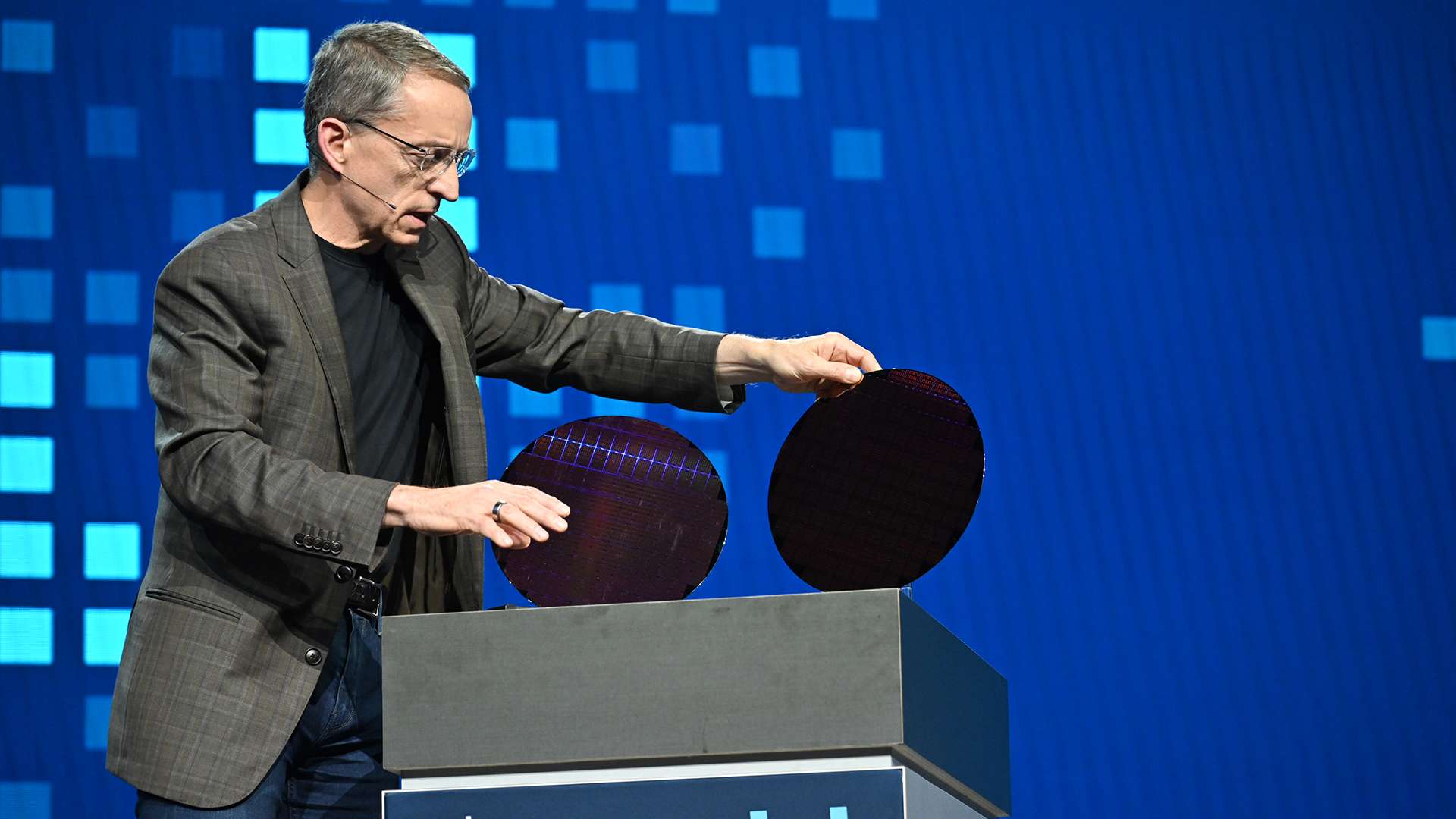

PHOENIX, April 9, 2024 – At its Vision 2024 conference, Intel introduced the Intel Gaudi 3 AI accelerator and unveiled a comprehensive strategy to bring openness, scalability and choice to enterprises deploying generative AI (GenAI).

The Intel Gaudi 3 accelerator promises major performance gains over the previous generation. Compared with its predecessor, Gaudi 2, it will deliver up to 4x more AI compute for BF16 operations and a 1.5x increase in memory bandwidth. Compared with Nvidia's H100, Gaudi 3 is projected to deliver 50% faster time-to-train on average across large language models such as Llama 2 (7B and 13B parameters) and GPT-3 175B. For inference, it is projected to outperform the H100 by 50% in throughput and 40% in power efficiency, on average, across models such as Llama (7B and 70B parameters) and Falcon 180B.

Intel announced that original equipment manufacturers (OEMs) Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro will offer Gaudi 3-based systems starting in Q2 2024, broadening the AI data center offerings available to enterprises. The company also highlighted a broad set of enterprise customers and partners, including NAVER, Bosch, IBM, Ola/Krutrim, NielsenIQ, Seekr, IFF, CtrlS, Bharti Airtel, Landing AI, Roboflow and Infosys, that are deploying or exploring Intel AI solutions powered by Xeon CPUs and Gaudi accelerators.

As part of its open systems approach, Intel revealed plans to create an open platform for enterprise GenAI in collaboration with SAP, Red Hat, VMware, and other industry leaders. The open, multivendor platform aims to deliver best-in-class ease of deployment, performance, and value. It will be enabled by retrieval-augmented generation (RAG), which allows enterprises to augment their vast proprietary data sources running on standard cloud infrastructure with open large language model capabilities.

Intel provided updates on its full AI roadmap across CPUs, GPUs, edge, and connectivity solutions. Highlights include next-generation Xeon 6 data center CPUs: the new power-efficient E-cores are projected to deliver 2.4x better performance per watt, and the P-cores add AI acceleration features such as support for the MXFP4 data format, for running large models of up to 70B parameters. On the client side, Intel expects to ship 40 million AI-enabled PCs in 2024, with future processors offering more than 100 platform TOPS and more than 45 NPU TOPS for advanced AI capabilities. For networking, Intel is leading the open Ultra Ethernet Consortium to develop Ethernet solutions optimized for scaling massive AI fabrics and clustering thousands of accelerators.

The company also unveiled its Intel Tiber portfolio to streamline the deployment of enterprise software and services, including GenAI solutions, with a unified experience for finding solutions that fit customer needs while addressing security, compliance and performance requirements.

About Intel

Intel (Nasdaq: INTC) is an industry leader, creating world-changing technology that enables global progress and enriches lives. Inspired by Moore’s Law, we continuously work to advance the design and manufacturing of semiconductors to help address our customers’ greatest challenges. By embedding intelligence in the cloud, network, edge and every kind of computing device, we unleash the potential of data to transform business and society for the better. To learn more about Intel’s innovations, go to newsroom.intel.com and intel.com.

© Intel Corporation. Intel, the Intel logo and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.
